The animated version of Stable Diffusion is here! It supports text, image, and video inputs

By Xifeng, reporting from Aofei Temple

QbitAI | Official account: QbitAI

Stable Diffusion can now generate video too!

You heard that right: Stability AI has launched a new text-to-animation toolkit called the Stable Animation SDK, which supports several input modes: text, text + initial image, and text + video.

Users can call any Stable Diffusion model, including Stable Diffusion 2.0 and Stable Diffusion XL, to generate animations.

Once the powerful features of the Stable Animation SDK were demonstrated, netizens reacted with excitement.

Stability AI is reportedly still refining the technology behind the new tool and is expected to release the source code of the components that drive the animation API soon.

3D, comic, and photographic styles, generated automatically with unlimited duration

The Stable Animation SDK supports three ways to create an animation:

1. Text to animation: the user enters a text prompt and adjusts various parameters to generate an animation (similar to Stable Diffusion).

2. Text input + initial image input: the user provides an initial image as the starting point for the animation. The image is combined with the text prompt to generate the final output animation.

3. Video input + text input: the user provides an initial video as the basis for the animation. The final output animation is generated from the text prompt after adjusting various parameters.
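The three input modes above can be sketched as a small dispatch function. This is only an illustration of the idea, not the SDK's actual interface: `AnimationRequest`, `input_mode`, and the rule that an image and a video cannot be combined are all assumptions made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AnimationRequest:
    """Hypothetical container for the inputs a user might supply."""
    prompt: str
    init_image: Optional[str] = None  # path to an initial image, if any
    init_video: Optional[str] = None  # path to an initial video, if any


def input_mode(req: AnimationRequest) -> str:
    """Return which of the three input modes a request corresponds to."""
    if not req.prompt.strip():
        # All three modes in the article include a text prompt.
        raise ValueError("a text prompt is required in every mode")
    if req.init_image and req.init_video:
        # Assumption for this sketch: the two starting points are exclusive.
        raise ValueError("provide an initial image or a video, not both")
    if req.init_video:
        return "text + video"
    if req.init_image:
        return "text + initial image"
    return "text only"


if __name__ == "__main__":
    print(input_mode(AnimationRequest("a cat dancing")))
    print(input_mode(AnimationRequest("a cat dancing", init_image="cat.png")))
    print(input_mode(AnimationRequest("a cat dancing", init_video="cat.mp4")))
```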

After Stability AI released the Stable Animation SDK, many netizens shared their test results.

The Stable Animation SDK exposes many parameters, such as steps, sampler, scale, and seed.

There are also many preset styles to choose from:

3D model, analog film, anime, cinematic, comic book, digital art, enhance, fantasy art, isometric, line art, low poly, modeling compound, neon punk, origami, photographic, and pixel art.
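To make the parameters and preset styles concrete, here is a minimal sketch of how such settings might be grouped and validated before a request is sent. The class name `AnimationSettings`, the field names, the default values for `sampler`, `scale`, and `seed`, and the lowercase style labels are all assumptions for illustration and do not mirror the SDK's real argument names; only the default of 30 steps comes from the article. Only a few of the preset styles are included.

```python
from dataclasses import dataclass

# A handful of the preset styles named in the article, written as
# lowercase labels (the exact identifiers the SDK expects may differ).
PRESET_STYLES = (
    "3d model", "comic book", "digital art",
    "neon punk", "origami", "pixel art",
)


@dataclass(frozen=True)
class AnimationSettings:
    """Hypothetical bundle of the parameters mentioned in the article."""
    steps: int = 30            # default step count cited in the article
    sampler: str = "default"   # placeholder; real sampler names are not given
    scale: float = 7.0         # illustrative guidance scale, an assumption
    seed: int = 0              # fixed seed so a run can be repeated
    style: str = "comic book"

    def __post_init__(self) -> None:
        # Reject obviously invalid values early.
        if self.steps < 1:
            raise ValueError("steps must be at least 1")
        if self.scale <= 0:
            raise ValueError("scale must be positive")
        if self.style not in PRESET_STYLES:
            raise ValueError(f"unknown preset style: {self.style!r}")


if __name__ == "__main__":
    print(AnimationSettings(seed=42, style="pixel art"))
```

Freezing the dataclass keeps a settings object from being changed after validation, which makes it easier to reproduce a run from the same seed.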

Currently, use of the animation API is billed in points: 10 US dollars buys 1,000 points.

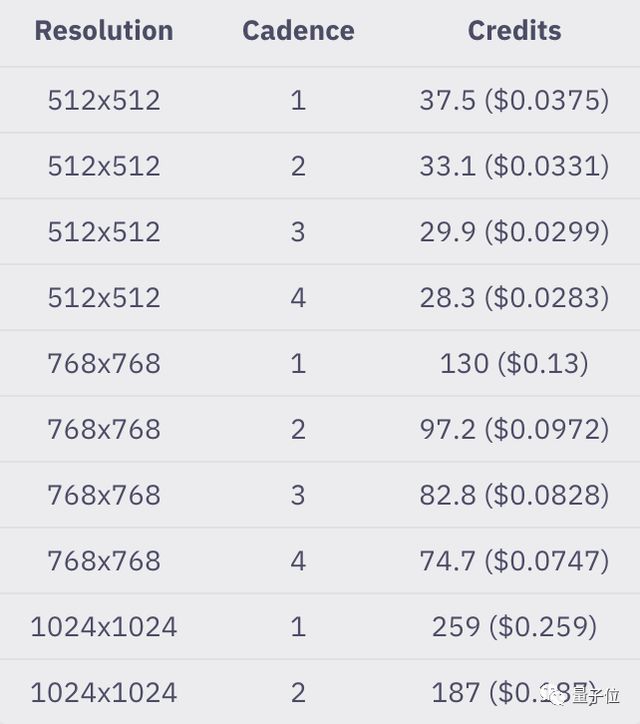

Using the Stable Diffusion v1.5 model, generating 100 frames (approximately 8 seconds) of video at the default settings (512x512 resolution, 30 steps) consumes 37.5 points.
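As a rough worked example of the pricing above: 1,000 points for 10 dollars means one point costs 0.01 dollars, and 37.5 points for 100 frames means 0.375 points per frame at the default settings. The sketch below simply scales those two figures linearly. That linearity is an assumption; actual usage also depends on model, resolution, steps, and Cadence. The function name `estimate_cost` is made up for the example.

```python
from typing import Tuple

# Figures quoted in the article.
POINTS_PER_DOLLAR = 1000 / 10   # 10 dollars buys 1000 points
POINTS_PER_FRAME = 37.5 / 100   # 37.5 points for 100 default-setting frames


def estimate_cost(frames: int) -> Tuple[float, float]:
    """Estimate (points, dollars) for a frame count at default settings.

    Assumes cost grows linearly with the number of frames.
    """
    if frames < 0:
        raise ValueError("frames must be non-negative")
    points = frames * POINTS_PER_FRAME
    dollars = points / POINTS_PER_DOLLAR
    return points, dollars


if __name__ == "__main__":
    for n in (100, 800):
        points, dollars = estimate_cost(n)
        print(f"{n} frames: {points} points, about ${dollars:.3f}")
```

Under these assumptions, the 100-frame example from the article works out to 37.5 points, or roughly 0.375 dollars.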

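The default Cadence value is 1, although the default varies with the animation mode; video-to-video animation uses a fixed Cadence of 1:1.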

Stability AI also provides an example of how point usage varies with the Cadence value when generating a 100-frame standard animation at standard still-image settings (512x512/768x768/1024x1024, 30 steps):

Now that we have seen the results and the pricing, how do you install the SDK and call the API?

To create animations and test the functionality of the SDK, you only need two steps to run the user interface:

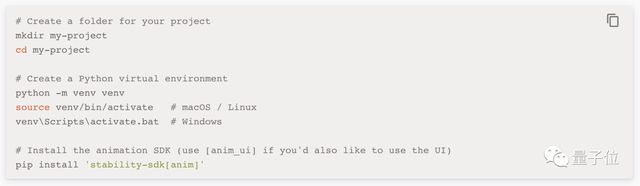

When developing applications, first set up a Python virtual environment and then install the Animation SDK inside it.
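The setup just described might look like the following sketch, which only builds and prints the two commands rather than running them. The package name `stability-sdk` and the `anim` extra are assumptions based on how the SDK is commonly installed; check the user manual linked at the end for the exact install line. The helper name `setup_commands` is hypothetical.

```python
import sys
from pathlib import Path
from typing import List


def setup_commands(env_dir: str = "venv", extra: str = "anim") -> List[List[str]]:
    """Build the commands for creating a virtual environment and
    installing the SDK into it. Nothing is executed here."""
    env = Path(env_dir)
    # Virtual environments keep executables in Scripts/ on Windows, bin/ elsewhere.
    bin_dir = env / ("Scripts" if sys.platform == "win32" else "bin")
    env_python = str(bin_dir / "python")
    return [
        # Step 1: create the virtual environment with the standard venv module.
        [sys.executable, "-m", "venv", str(env)],
        # Step 2: install the SDK with the environment's own interpreter.
        # Package and extra names are assumptions, not confirmed by the article.
        [env_python, "-m", "pip", "install", f"stability-sdk[{extra}]"],
    ]


if __name__ == "__main__":
    for cmd in setup_commands():
        print(" ".join(cmd))
```

To actually perform the setup, each command list could be passed to `subprocess.run(cmd, check=True)` in order.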

The full user manual is linked at the end of this article.

Video generation keeps getting more popular

AI video generation has been heating up recently, and Gen-2 has now been updated too.

The Gen-2 update brings eight major features in one go, including Storyboard and Mask.

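Others have been experimenting as well. Ammaar Reshi has combined ChatGPT and MidJourney in AI-assisted projects, and some creators run Stable Diffusion on Google Colab.

The overseas visual-effects team Corridor, for example, trained an AI model based on Stable Diffusion that ultimately converts live-action footage into an animated version.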

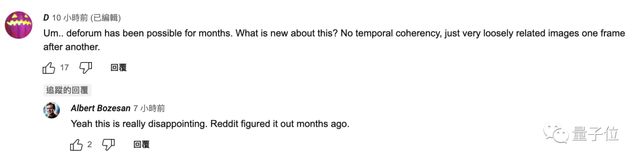

While many people are excited about the new tools, some netizens have questioned the quality of the videos generated by the Stable Animation SDK, with deforum coming up as a point of comparison.

Have you tried these tools yet? What do you think of the results?

Link:

https://platform.stability.ai/docs/features/animation/using (Stable Animation SDK user manual)

Reference links:

[1] https://www.youtube.com/watch?v=xsoMk1EJoAY

[2] https://twitter.com/_akhaliq/status/1656693639085539331

[3] https://stability.ai/blog/stable-animation-sdk