Baichuan Intelligent Launches Baichuan2-192K Large Model: Accepts 350,000 Chinese Characters at Once, Surpassing Claude2

Lei Di Wang, Le Tian, October 30

Baichuan Intelligent released the Baichuan2-192K large model today. Its context window is up to 192K long, which the company says is currently the longest context window in the world.

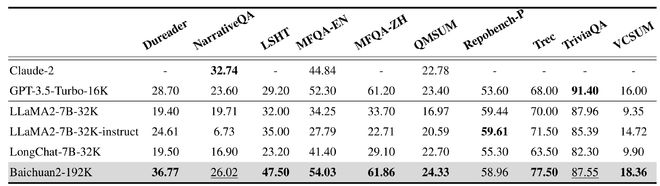

Baichuan Intelligent said that Baichuan2-192K can process about 350,000 Chinese characters. That is 4.4 times the capacity of Claude2, currently the best large model with a long context window (it supports a 100K context window, measured at about 80,000 characters), and 14 times that of GPT-4 (which supports a 32K context window, measured at about 25,000 characters). According to the company, Baichuan2-192K not only surpasses Claude2 in context window length, but also leads it in long-window text generation quality, long-context understanding, long-text Q&A, and summarization.
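The multiples quoted above follow directly from the measured character counts. A quick check in Python, using only the figures stated in the article (the variable names are illustrative):

```python
# Measured capacities in Chinese characters, as quoted in the article.
baichuan2_192k_chars = 350_000
claude2_chars = 80_000
gpt4_chars = 25_000

# Ratio of Baichuan2-192K's capacity to each comparison model.
ratio_claude2 = baichuan2_192k_chars / claude2_chars
ratio_gpt4 = baichuan2_192k_chars / gpt4_chars

print(f"vs Claude2: {ratio_claude2:.1f}x")
print(f"vs GPT-4:   {ratio_gpt4:.0f}x")
```

The Claude2 ratio is 4.375 before rounding, which the article reports as 4.4.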

On September 25, 2023, Baichuan Intelligent opened the Baichuan2 API, formally entering the enterprise market and beginning commercialization. Baichuan2-192K will likewise be offered to enterprise users through API calls and private deployment. Baichuan Intelligent has already started a closed beta of the Baichuan2-192K API, open to core partners in the legal, media, finance, and other industries.

SOTA on 7 of 10 long-text evaluations, with a claimed lead over Claude2

Context window length is reported to be one of the core technologies of large models. With a larger context window, a model can draw on more surrounding content to obtain richer semantic information, capture contextual relevance more reliably, resolve ambiguity, and generate content more accurately and fluently.

In addition, LongEval evaluation results show that Baichuan2-192K still maintains good performance after the window length exceeds 100K.

LongEval is a benchmark for long-window models released by the University of California, Berkeley, in collaboration with other universities. It mainly measures a model's ability to remember and understand long-window content, and is regarded in the industry as an authoritative benchmark for long-context understanding.

Position encoding optimization based on dynamic sampling, and a 4D parallel distributed solution

There is a consensus in the artificial intelligence industry that expanding the context window can effectively improve the performance of large models. However, an ultra-long context window means higher compute requirements and greater GPU memory pressure. The industry currently uses many methods to lengthen the context window, including sliding windows, downsampling, and small models. Although these methods do extend the context window, they all degrade model performance to varying degrees. In other words, they trade away other aspects of the model's performance in exchange for a longer context window.
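To give a sense of the memory pressure described above, here is a minimal back-of-the-envelope sketch. It assumes a naive attention implementation that materializes the full score matrix in 16-bit floats, and it assumes "K" means 1,024 tokens; neither assumption comes from the article, and memory-efficient attention kernels avoid storing this matrix in full. The function name is illustrative.

```python
def attention_scores_bytes(seq_len, bytes_per_value=2):
    """Bytes needed to hold one seq_len x seq_len attention score matrix.

    Assumes naive (fully materialized) attention and 2-byte values (fp16/bf16).
    """
    return seq_len * seq_len * bytes_per_value

GIB = 2 ** 30

# Window sizes mentioned in the article, with K assumed to be 1,024 tokens.
windows = {"32K": 32 * 1024, "100K": 100 * 1024, "192K": 192 * 1024}

for label, tokens in windows.items():
    gib = attention_scores_bytes(tokens) / GIB
    print(f"{label}: {gib:.1f} GiB per attention head, per layer")
```

Because the cost grows with the square of the sequence length, going from 32K to 192K (6 times longer) multiplies this figure by 36, which is why long windows are usually paired with memory-saving techniques.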

With Baichuan2-192K, Baichuan says it has balanced window length against model performance through extensive algorithmic and engineering optimization, improving both at the same time.

On the algorithm side, Baichuan Intelligent has proposed an extrapolation scheme for dynamic position encoding with RoPE and ALiBi. The scheme dynamically interpolates the attention mask to varying degrees for ALiBi position encodings of different lengths, preserving resolution while strengthening the model's ability to model long-sequence dependencies. On PG-19, a standard evaluation dataset for long-text perplexity, the sequence modeling ability of Baichuan2-192K continues to improve as the window length grows.
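The article does not publish the details of Baichuan's scheme, so the following is only a generic sketch of the underlying idea: standard ALiBi adds a linear distance penalty to attention scores, and a simple form of interpolation compresses distances once the sequence exceeds the training length. The `train_len` parameter and the linear scaling rule are assumptions for illustration, not Baichuan's method.

```python
def alibi_slopes(num_heads):
    """Per-head slopes from the ALiBi paper (num_heads assumed a power of two)."""
    return [2 ** (-8 * (h + 1) / num_heads) for h in range(num_heads)]

def alibi_bias(seq_len, slope, train_len):
    """Causal ALiBi bias matrix for one head, with simple linear interpolation.

    Assumption (not from the article): when seq_len exceeds train_len,
    distances are scaled by train_len / seq_len so that penalties stay
    within the range seen during training.
    """
    scale = min(1.0, train_len / seq_len)
    bias = []
    for i in range(seq_len):          # query position
        row = []
        for j in range(seq_len):      # key position
            if j > i:
                row.append(float("-inf"))  # causal mask: no attending to the future
            else:
                row.append(-slope * (i - j) * scale)
        bias.append(row)
    return bias

# Example: a model "trained" on 4 positions evaluated on 8.
slope = alibi_slopes(8)[0]
bias = alibi_bias(seq_len=8, slope=slope, train_len=4)
print(bias[7][0])  # penalty on the most distant key for the last query
```

In this toy setting the largest penalty at length 8 is smaller than it would be without scaling, which is the intuition behind interpolating rather than extrapolating position information.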

(PG-19 is a language modeling benchmark dataset released by DeepMind and is regarded in the industry as a standard for evaluating a model's long-range memory and reasoning.)
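Language modeling results on datasets such as PG-19 are typically reported as perplexity, the exponential of the average per-token negative log-likelihood; lower is better. A minimal sketch of that calculation, assuming the per-token natural-log probabilities have already been obtained from a model (the function name and input format are illustrative):

```python
import math

def perplexity(token_log_probs):
    """Perplexity from a list of per-token natural-log probabilities."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)

# A model that assigns probability 0.25 to every token behaves like a
# uniform choice among 4 options, so its perplexity is about 4.
uniform_guess = [math.log(0.25)] * 4
print(perplexity(uniform_guess))
```

A model that benefits from longer context should assign higher probability to later tokens as the window grows, which shows up as falling perplexity.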

On the engineering side, Baichuan Intelligent says that its self-developed distributed training framework integrates the advanced optimization techniques currently available, including tensor parallelism, pipeline parallelism, sequence parallelism, recomputation, and offloading, to form a comprehensive 4D parallel distributed solution. The solution can automatically find the most suitable distributed strategy for the model's specific workload, reducing GPU memory usage during long-window training and inference.

Baichuan2-192K officially enters closed beta

Baichuan Intelligent stated that Baichuan2-192K has officially entered closed beta and is available to its core partners through API calls. The company has reached cooperation agreements with financial media outlets and law firms to apply the long-context capabilities of Baichuan2-192K, which it describes as world-leading, to specific scenarios in media, finance, and law. The model will soon be fully opened.

Once the API is fully open, Baichuan2-192K can be integrated more deeply with vertical scenarios and play a practical role in people's work, life, and study, helping industry users cut costs and improve efficiency. Baichuan2-192K can process and analyze hundreds of pages of material at once, which helps with extracting and analyzing key information from long documents and with real-world tasks such as long-document summarization, long-document review, writing long articles or reports, and complex programming assistance.

Baichuan Intelligent said the model can help fund managers summarize and interpret financial statements and analyze a company's risks and opportunities; help lawyers identify risks across multiple legal documents and review contracts; help technical staff read hundreds of pages of development documentation and answer technical questions; and help researchers quickly skim large numbers of papers and summarize the latest developments.

A longer context also provides underlying support for better processing and understanding complex multimodal inputs, as well as achieving better transfer learning. This will lay a solid technical foundation for the industry to explore cutting-edge fields such as agents and multimodal applications.

Lei Di was founded by media professional Lei Jianping. Please credit the source when reprinting.
