Multi modal large model illusion reduced by 30%! China University of Science and Technology and others proposed the first illusion correction architecture "Woodpecker" woodpecker

Xinzhiyuan ReportEditor: So sleepyIntroduction to New Intelligence ElementRecently, researchers from institutions such as China University of Science and Technology have proposed the first multimodal modification architecture, "Woodpecker," which can effectively solve the problem of MLLM output hallucinations.Visual hallucination is a typical problem commonly encountered in multimodal large language models (MLLMs)

Xinzhiyuan Report

Editor: So sleepy

Introduction to New Intelligence ElementRecently, researchers from institutions such as China University of Science and Technology have proposed the first multimodal modification architecture, "Woodpecker," which can effectively solve the problem of MLLM output hallucinations.

Visual hallucination is a typical problem commonly encountered in multimodal large language models (MLLMs).

Simply put, the description output by the model does not match the image content.

The following image shows two types of illusions, with the red part mistakenly describing the dog's color (attribute illusion) and the blue part describing something that does not actually exist in the image (target illusion).

Hallucinations have a significant negative impact on the reliability of models, which has attracted the attention of many researchers.

The previous methods mainly focused on MLLM itself, by improving the training data and architecture to train a new MLLM through fine-tuning.

However, this approach incurs significant data construction and training costs, and is difficult to generalize to various existing MLLMs.

Recently, researchers from institutions such as China University of Science and Technology have proposed a universal plug-and-play architecture that does not require trainingWoodpeckerResolve the issue of MLLM output hallucinations through correction.

Paper address: https://arxiv.org/pdf/2310.16045.pdf

Project address: https://github.com/BradyFU/Woodpecker

Effect display

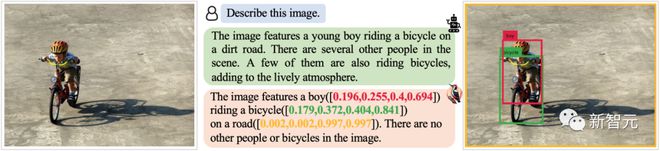

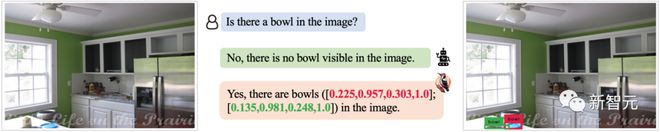

Specifically, Woodpicker can correct the illusion of model output in various scenarios and output detection boxes as citations to indicate the existence of corresponding targets.

For example, when faced with descriptive tasks, Woodpecker can correct the parts with hallucinations:

Woodpecker can also accurately correct small objects that are difficult to detect in MLLM:

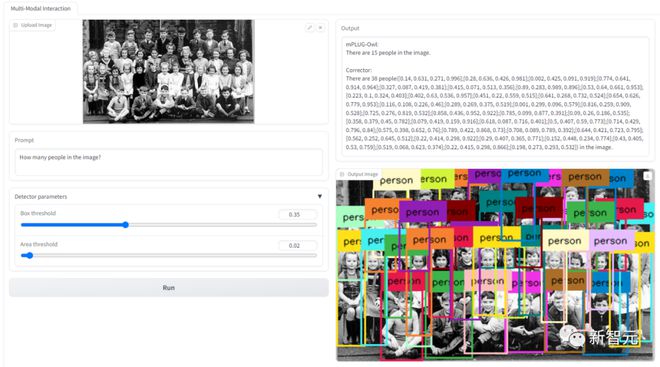

Faced with complex counting scenarios that MLLM is difficult to solve, Woodpecker can also solve:

Woopecker also handles the illusion problem of target attribute classes well:

In addition, Woodpecker also provides Demos for readers to test and use.

As shown in the following figure, by uploading the image and entering the request, you can obtain the pre and post correction model responses, as well as new images for reference and verification.

method

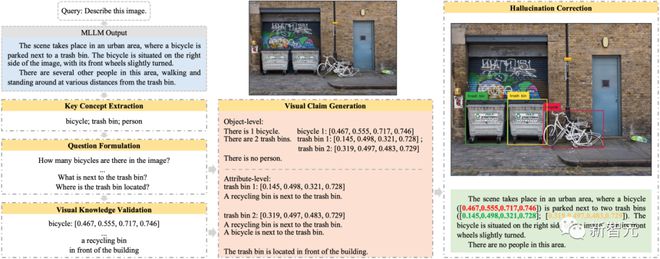

The architecture of Woodpecker is as follows, which includes five main steps: key concept extraction, problem construction, visual knowledge verification, visual assertion generation, and illusion correction.

-Key Concept Extraction

The key concept refers to the existence targets that are most likely to have hallucinations in the output of MLLM, such as "bicycles; trash cans; people" described in the above image.

We can use the Prompt big language model to extract these key concepts, which are the foundation for subsequent steps.

-Problem Construction

Around the key concepts extracted in the previous step, the Prompt big language model is used to propose some questions that can help verify the authenticity of image descriptions, such as "How many bicycles are there in the picture?", "What is next to the trash can?", and so on.

-Visual knowledge testing

Use visual basic models to test the proposed questions and obtain information related to images and descriptive text.

For example, we can use GroundingDINO for object detection to determine whether key targets exist and the number of key targets. Because visual basic models such as GroundingDINO have a stronger ability to perceive images than MLLM itself.

For attribute questions such as target color, BLIP-2 can be used to answer them. Traditional VQA models such as BLIP-2 have limited length of output answers and fewer hallucination problems.

-Visual Assertion Generation

Based on the problems obtained in the first two steps and the corresponding visual information, synthesize a structured 'visual assertion'. These visual assertions can be seen as a visual knowledge base related to the original MLLM responses and input images.

-Hallucination correction

Based on the previous results, a large language model is used to modify the text output of MLLM one by one, and the detection box information corresponding to the target is provided as a reference for visual inspection.

experimental result

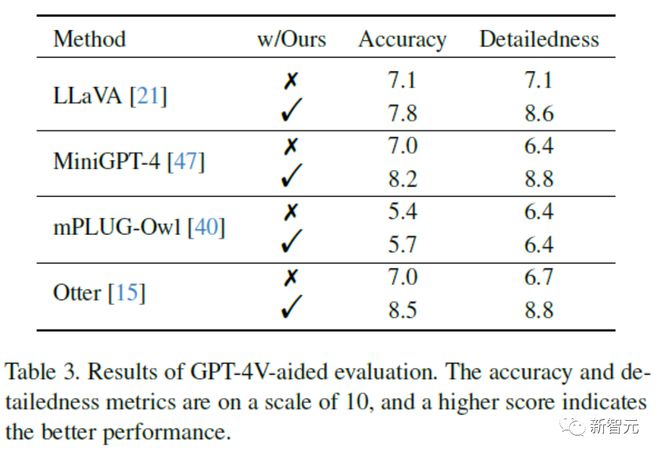

Several typical MLLMs were selected as baselines for the experiment, including LLaVA, mPLUG-Owl, Otter, and MiniGPT-4.

WoodpeckerPOPEexperimental result

The results indicate that applying Woodpecker correction on different MLLM results in varying degrees of improvement.

Under random setting, Woodpecker improved the accuracy indicators of MiniGPT-4 and mPLUG-Owl by 30.66% and 24.33%, respectively.

In addition, the researchers also applied a more comprehensive validation set MME to further test Woodpecker's ability to correct attribute hallucinations. The results are shown in the table below:

From the table, it can be seen that Woodpecker is not only effective in dealing with target hallucinations, but also has excellent performance in correcting color and other attribute hallucinations. The color score of LLaVA has significantly increased from 78.33 points to 155 points!

After Woodpecker correction, the total scores of the four baseline models on all four test subsets exceeded 500 points, indicating a significant improvement in overall perception ability.

To measure correction performance more directly, a more direct approach is to use open evaluation.

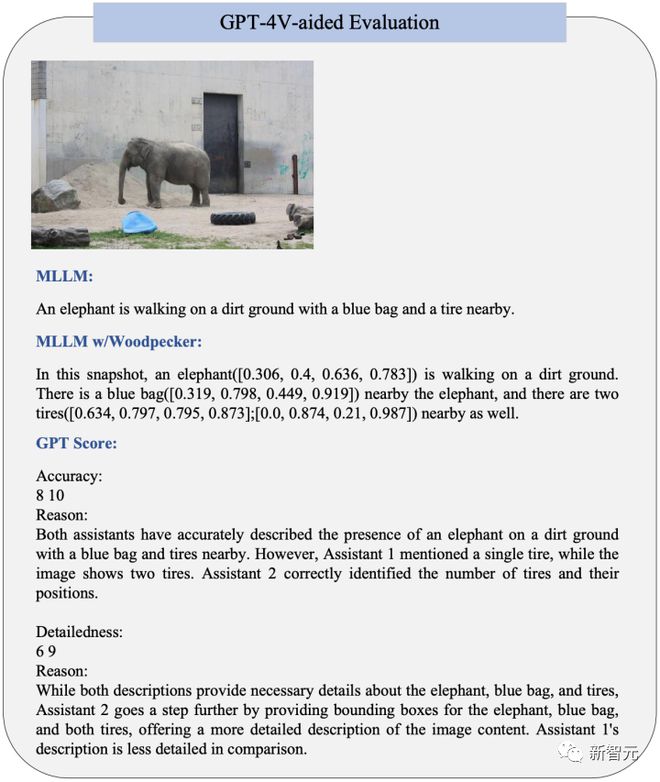

Unlike the previous approach of translating images into plain text GPT-4, this article utilizes the recently opened visual interface of OpenAI and proposes using GPT-4 (Vision) to directly score the following two dimensions for pre and post correction image descriptions:

-Accuracy: Whether the model's response is accurate relative to the image content

-Level of detail: The richness of detail in the model response

experimental result :

The results indicate that the accuracy of image description has been improved after Woodpecker correction, indicating that the framework can effectively correct the hallucinations in the description.

On the other hand, the location information introduced by Woodpecker after modification enriches the text description and provides further location information, thereby improving the richness of details.

The GPT-4V assisted evaluation example is shown in the following figure:

Interested readers can read the paper to learn more about the content.

References:

https://arxiv.org/pdf/2310.16045.pdf

https://github.com/BradyFU/Woodpecker

Tag: illusion and Multi modal large model reduced by China

Disclaimer: The content of this article is sourced from the internet. The copyright of the text, images, and other materials belongs to the original author. The platform reprints the materials for the purpose of conveying more information. The content of the article is for reference and learning only, and should not be used for commercial purposes. If it infringes on your legitimate rights and interests, please contact us promptly and we will handle it as soon as possible! We respect copyright and are committed to protecting it. Thank you for sharing.