Zuck Bets Big on Large Models! Meta Launches Custom Chip MTIA v1 and a New Supercomputer


Xinzhiyuan Report

Editor: Layan Aeneas

Xinzhiyuan Summary: The whole world is racing to build large models, and Zuckerberg is feeling the heat too. Meta is now making big bets on custom chips and supercomputers to develop AI.

Meta now has a fully in-house chip of its own!

This Thursday, Meta unveiled MTIA v1, its first-generation custom AI inference chip, along with its supercomputer.

MTIA can be considered a major boost for Meta, especially now that companies everywhere are scaling up their models and demand for AI compute keeps rising.

Zuckerberg recently said that Meta sees "an opportunity to introduce AI agents to billions of people in a useful and meaningful way."

Clearly, as Meta increases its investment in AI, the MTIA chip and its supercomputing plans will be key tools for competing with other tech giants, since every one of them is now pouring resources into AI.

As you can see, with customized chips and supercomputing, Meta has made a big bet on AI.

MTIA

At a recent virtual event, Meta pulled back the curtain on its in-house infrastructure.

The new chip's full name is the Meta Training and Inference Accelerator, or MTIA for short.

MTIA is an ASIC (application-specific integrated circuit), a chip that combines different circuits on a single board and can be programmed to perform one or more tasks in parallel.

In a blog post, Santosh Janardhan, Meta's Vice President and Head of Infrastructure, wrote that MTIA is Meta's "in-house custom accelerator chip family for inference workloads," providing "greater compute power and efficiency" than CPUs and "customized for our internal workloads."

By deploying MTIA chips alongside GPUs, Janardhan said, Meta believes "we will deliver better performance, lower latency, and greater efficiency for each workload."

Admittedly, this is also a show of strength from Meta. In reality, Meta has been slow to adopt AI-friendly hardware systems, which has hampered its ability to keep pace with competitors such as Microsoft and Google.

Alexis Bjorlin, Vice President of Infrastructure at Meta, said in an interview that by building its own hardware, Meta can control every layer of the stack, from data center design to training frameworks.

This level of vertical integration is essential for pushing the boundaries of AI research at scale.

In the past decade, Meta has spent billions of dollars hiring top data scientists to build new AI models.

Meta has also struggled to bring many of its more ambitious AI research innovations into production, especially in generative AI.

Until 2022, Meta mainly ran its AI workloads on a combination of CPUs and a custom chip designed to accelerate AI algorithms.

This CPU-plus-custom-chip combination is usually less efficient than GPUs at such tasks.

So Meta canceled the custom chip it had planned to roll out at scale in 2022 and instead ordered billions of dollars' worth of Nvidia GPUs.

Bringing in these GPUs required a disruptive redesign of several of Meta's data centers.

To turn this situation around, Meta plans to develop a more powerful in-house chip, expected to launch in 2025, that can both train and run AI models.

The star of the show has finally arrived: the new chip is called MTIA, short for Meta Training and Inference Accelerator.

The chip is designed to speed up both AI training and inference.

The research team described MTIA as an ASIC, a chip that combines different circuits on a single board and can be programmed to perform one or more tasks simultaneously.

An AI chip designed specifically for Meta's AI workloads

It's worth knowing that competition among the tech giants increasingly comes down to chips.

For example, Google's TPUs were used to train PaLM 2 and Imagen, and Amazon also has its own chips for training AI models.

In addition, there are reports that Microsoft is also developing a chip called Athena with AMD.

Now, the arrival of MTIA shows that Meta is unwilling to be outdone.

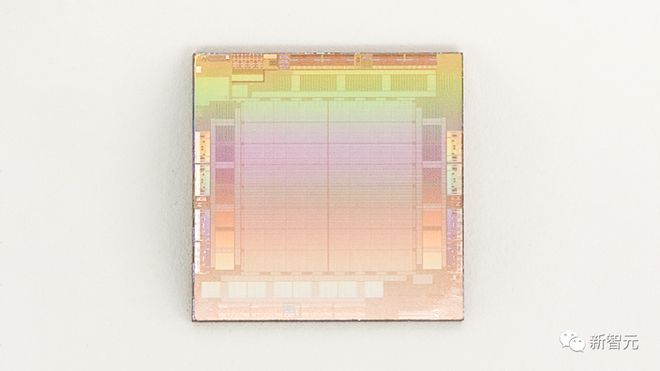

Meta stated that it created the first generation of the chip, MTIA v1, in 2020 on a 7nm process.

The chip's memory can be scaled from 128MB to 128GB, and in a benchmark designed by Meta, MTIA processed AI models of low to medium complexity more efficiently than a GPU.

There is still a lot of work to do on the chip's memory and networking. As AI models grow, MTIA is likely to run into bottlenecks, and Meta will need to split workloads across multiple chips.

In response, Meta stated that it will keep improving MTIA's performance per watt on recommendation workloads.

As early as 2020, Meta had designed the first-generation MTIA ASIC for its internal workloads.

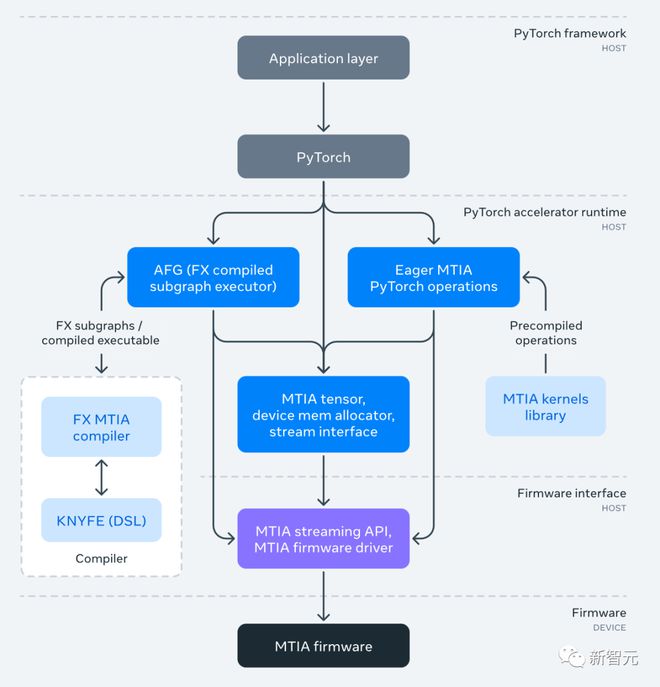

This inference accelerator is part of a co-designed full-stack solution that spans the chip, PyTorch, and recommendation models.

The accelerator is manufactured on TSMC's 7nm process and runs at 800MHz. It delivers 102.4 TOPS at INT8 precision and 51.2 TFLOPS at FP16 precision, with a thermal design power (TDP) of 25W.
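As a quick sanity check on these figures, the sketch below does some back-of-envelope arithmetic: operations per clock cycle for the whole chip, the share per processing element (using the 64-PE grid Meta describes), and INT8 throughput per watt at the 25W TDP. The derived numbers are our own estimates rather than Meta-published figures, and counting one multiply-accumulate as two operations is an assumption.

```python
# Back-of-envelope arithmetic on MTIA v1's published specs.
# Derived values are estimates, not figures published by Meta.

CLOCK_HZ = 800e6      # 800 MHz
INT8_TOPS = 102.4     # peak INT8 throughput (tera-ops/s)
FP16_TFLOPS = 51.2    # peak FP16 throughput (tera-FLOP/s)
TDP_W = 25.0          # chip thermal design power
NUM_PES = 64          # 8x8 grid of processing elements


def ops_per_cycle(tera_ops: float, clock_hz: float) -> float:
    """Peak operations the whole chip completes per clock cycle."""
    return tera_ops * 1e12 / clock_hz


int8_per_cycle = ops_per_cycle(INT8_TOPS, CLOCK_HZ)
int8_per_pe = int8_per_cycle / NUM_PES
# Assumption: one multiply-accumulate (MAC) counts as 2 operations.
int8_macs_per_pe = int8_per_pe / 2
tops_per_watt = INT8_TOPS / TDP_W
fp16_to_int8 = FP16_TFLOPS / INT8_TOPS

print(f"INT8 ops/cycle (chip): {int8_per_cycle:,.0f}")    # 128,000
print(f"INT8 ops/cycle (per PE): {int8_per_pe:,.0f}")     # 2,000
print(f"INT8 MACs/cycle (per PE): {int8_macs_per_pe:,.0f}")  # 1,000
print(f"INT8 TOPS/W at TDP: {tops_per_watt:.3f}")         # 4.096
print(f"FP16:INT8 ratio: {fp16_to_int8:.2f}")             # 0.50
```

The FP16 figure is exactly half the INT8 figure.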

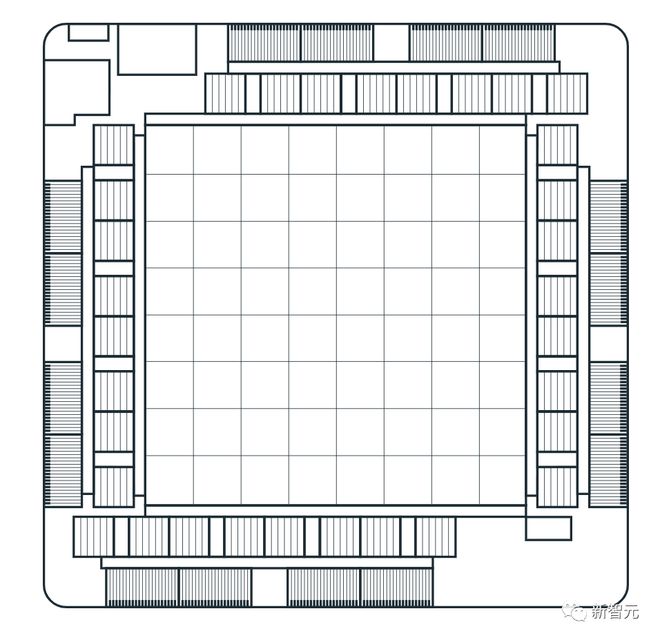

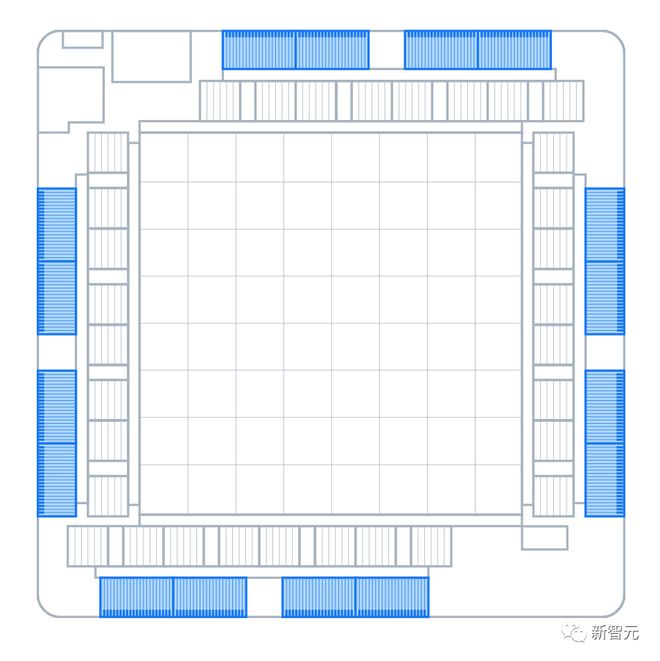

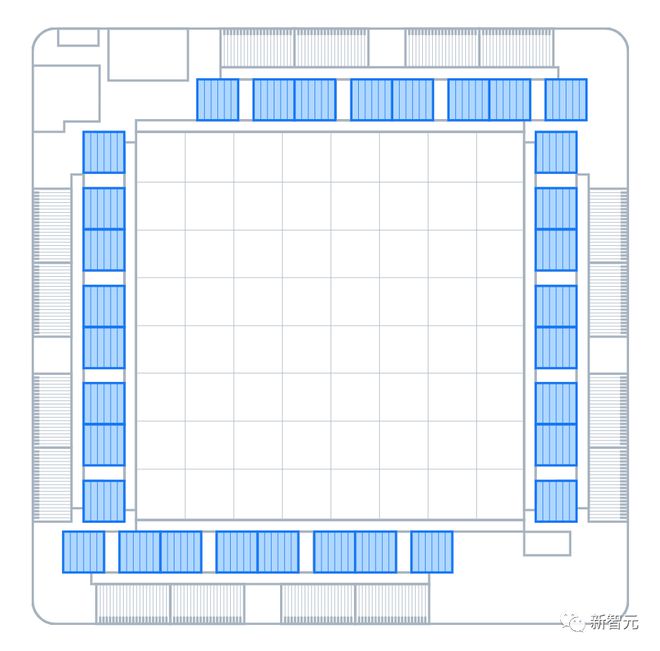

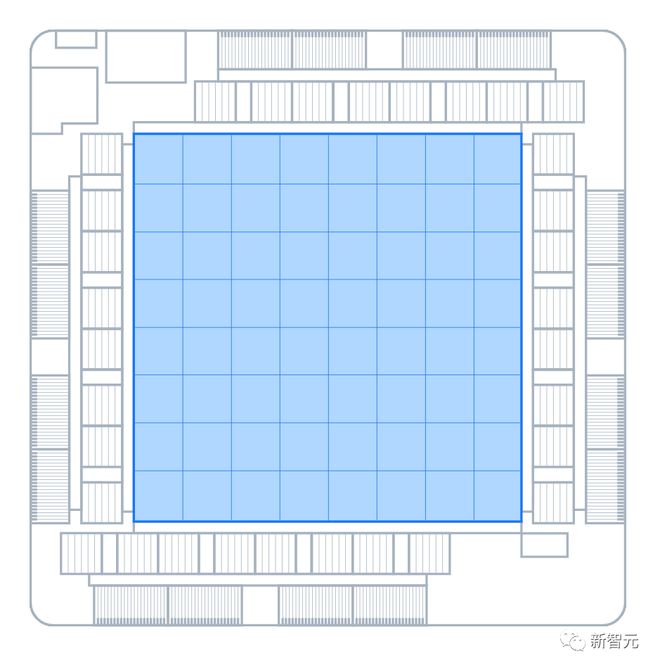

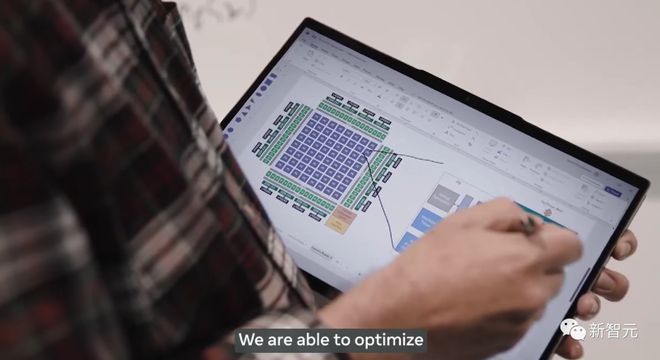

At a high level, the accelerator consists of a grid of processing elements (PEs), on-chip and off-chip memory resources, and interconnects.

The accelerator is equipped with a dedicated control subsystem that runs system firmware. The firmware manages available computing and memory resources, communicates with the host through a dedicated host interface, and coordinates job execution on the accelerator.

The memory subsystem uses LPDDR5 for off-chip DRAM, which can be expanded to 128GB.

The chip also has 128MB of on-chip SRAM shared by all PEs, providing higher bandwidth and lower latency for frequently accessed data and instructions.

The grid contains 64 PEs organized in an 8x8 configuration. The PEs connect to each other and to the memory blocks through a mesh network. The grid can run an entire job, or it can be divided into multiple sub-grids that run independent jobs.
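To make the grid topology concrete, here is a toy model of an 8x8 mesh. It assumes (our assumption, not part of Meta's description) that a PE at (row, col) links only to its four neighbours, so the minimum hop count between two PEs is their Manhattan distance, and that sub-grids are axis-aligned rectangles. `SubGrid`, `mesh_hops` and `validate_partition` are hypothetical names.

```python
from dataclasses import dataclass
from itertools import product

GRID = 8  # 8x8 grid of PEs


@dataclass(frozen=True)
class SubGrid:
    """An axis-aligned rectangle of PEs reserved for one job (hypothetical)."""
    row0: int
    col0: int
    rows: int
    cols: int

    def pes(self) -> set[tuple[int, int]]:
        return {(self.row0 + r, self.col0 + c)
                for r, c in product(range(self.rows), range(self.cols))}


def mesh_hops(a: tuple[int, int], b: tuple[int, int]) -> int:
    """Minimum hops between two PEs in a 2D mesh (Manhattan distance)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])


def validate_partition(subgrids: list[SubGrid]) -> bool:
    """True if every sub-grid stays inside the grid and no PE is shared."""
    seen: set[tuple[int, int]] = set()
    for sg in subgrids:
        cells = sg.pes()
        if any(not (0 <= r < GRID and 0 <= c < GRID) for r, c in cells):
            return False
        if cells & seen:
            return False
        seen |= cells
    return True


whole = [SubGrid(0, 0, 8, 8)]  # one job uses all 64 PEs
quarters = [SubGrid(r, c, 4, 4) for r in (0, 4) for c in (0, 4)]
print(validate_partition(whole), len(whole[0].pes()))          # True 64
print(validate_partition(quarters), [len(q.pes()) for q in quarters])
print(mesh_hops((0, 0), (7, 7)))  # corner-to-corner worst case: 14
```

Splitting the grid into four 4x4 sub-grids would let four independent jobs run side by side, each with a shorter worst-case hop distance inside its own partition.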

The MTIA accelerator is mounted on a small dual M.2 board, which makes it easier to integrate into servers. The boards connect to the server's host CPU over a PCIe Gen4 x8 link and consume as little as 35W.
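For a sense of scale, the theoretical bandwidth of a PCIe Gen4 x8 link can be worked out from the standard Gen4 signalling rate (16 GT/s per lane) and 128b/130b line encoding. The sketch ignores packet and protocol overhead, so real throughput is lower; the "copy 128MB from the host" example is purely illustrative.

```python
# Theoretical PCIe Gen4 x8 bandwidth, one direction, counting only
# line-encoding overhead (real-world throughput is lower).
GT_PER_S_PER_LANE = 16e9   # PCIe Gen4 signalling rate per lane
ENCODING = 128 / 130       # 128b/130b line encoding
LANES = 8


def link_bandwidth_bytes(gt_per_s: float, encoding: float, lanes: int) -> float:
    """Usable bytes/s per direction before protocol overhead."""
    return gt_per_s * encoding * lanes / 8  # 8 bits per byte


bw = link_bandwidth_bytes(GT_PER_S_PER_LANE, ENCODING, LANES)
print(f"{bw / 1e9:.2f} GB/s per direction")  # 15.75 GB/s per direction

# Illustrative only: time to move 128MB (the on-chip SRAM size)
# from host memory at the theoretical peak.
payload = 128e6
print(f"{payload / bw * 1e3:.3f} ms")  # 8.125 ms
```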

Example of a test board with MTIA

The MTIA software (SW) stack is designed to give developers efficiency and high performance. It is fully integrated with PyTorch, so using PyTorch with MTIA is as simple as using PyTorch with CPUs or GPUs.

The PyTorch runtime for MTIA manages on-device execution and features such as MTIA tensors, memory management, and APIs for scheduling operators on the accelerator.
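The runtime responsibilities listed above (device tensors, memory management, operator scheduling) can be illustrated with a toy model. The sketch is entirely hypothetical: `ToyDeviceRuntime`, `DeviceTensor` and their methods are invented for illustration and are not MTIA's or PyTorch's actual API. It simply tracks a fixed device-memory budget and dispatches registered kernels by name.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class DeviceTensor:
    """A tensor 'resident' on a hypothetical accelerator."""
    data: list[float]
    nbytes: int


@dataclass
class ToyDeviceRuntime:
    """Hypothetical runtime: tracks device memory and dispatches operators."""
    capacity_bytes: int
    used_bytes: int = 0
    kernels: dict[str, Callable[..., list[float]]] = field(default_factory=dict)

    def to_device(self, values: list[float], bytes_per_elem: int = 2) -> DeviceTensor:
        """Allocate device memory (2 bytes/element, i.e. FP16) and copy data."""
        nbytes = len(values) * bytes_per_elem
        if self.used_bytes + nbytes > self.capacity_bytes:
            raise MemoryError("out of device memory")
        self.used_bytes += nbytes
        return DeviceTensor(list(values), nbytes)

    def free(self, t: DeviceTensor) -> None:
        self.used_bytes -= t.nbytes

    def register(self, name: str, fn: Callable[..., list[float]]) -> None:
        self.kernels[name] = fn

    def launch(self, name: str, *args: DeviceTensor) -> DeviceTensor:
        """Run a registered kernel and place its result in device memory."""
        if name not in self.kernels:
            raise NotImplementedError(f"no kernel registered for {name!r}")
        out = self.kernels[name](*(a.data for a in args))
        return self.to_device(out)


rt = ToyDeviceRuntime(capacity_bytes=64)
rt.register("add", lambda a, b: [x + y for x, y in zip(a, b)])
a = rt.to_device([1.0, 2.0])
b = rt.to_device([3.0, 4.0])
c = rt.launch("add", a, b)
print(c.data, rt.used_bytes)  # [4.0, 6.0] 12
```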

MTIA software stack

There are multiple ways to write compute kernels that run on the accelerator: using PyTorch, using C/C++ (for hand-tuned, highly optimized kernels), and using a new domain-specific language called KNYFE.
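Hand-tuned kernels commonly restructure loops so that blocks of operands can be reused from fast on-chip memory. The plain-Python sketch below shows that general idea (loop tiling, or blocking, for matrix multiplication). It is not MTIA, C/C++ or KNYFE code; the function names and the tile size are illustrative assumptions.

```python
def matmul_naive(a, b):
    """Reference matrix multiply: (n x k) @ (k x m)."""
    n, k, m = len(a), len(b), len(b[0])
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
            for i in range(n)]


def matmul_tiled(a, b, tile=2):
    """Blocked matrix multiply. Each (i0, j0, p0) block is a unit of work
    whose operands could, on real hardware, be staged in on-chip SRAM."""
    n, k, m = len(a), len(b), len(b[0])
    c = [[0.0] * m for _ in range(n)]
    for i0 in range(0, n, tile):
        for j0 in range(0, m, tile):
            for p0 in range(0, k, tile):
                for i in range(i0, min(i0 + tile, n)):
                    for p in range(p0, min(p0 + tile, k)):
                        a_ip = a[i][p]  # reused across the j loop
                        for j in range(j0, min(j0 + tile, m)):
                            c[i][j] += a_ip * b[p][j]
    return c


A = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12]]
B = [[1, 0, 2, 0, 1], [0, 1, 0, 2, 1], [3, 0, 1, 0, 1], [0, 2, 0, 1, 1]]
print(matmul_tiled(A, B) == matmul_naive(A, B))  # True
```

Tiling does not change the result, only the order in which data is touched; on hardware, the tile size would be chosen so that each block fits in fast local memory.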

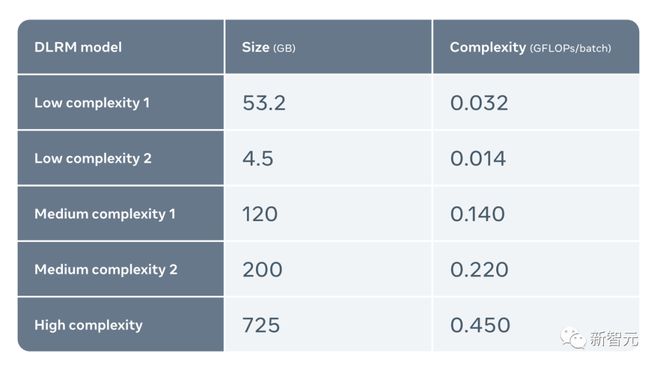

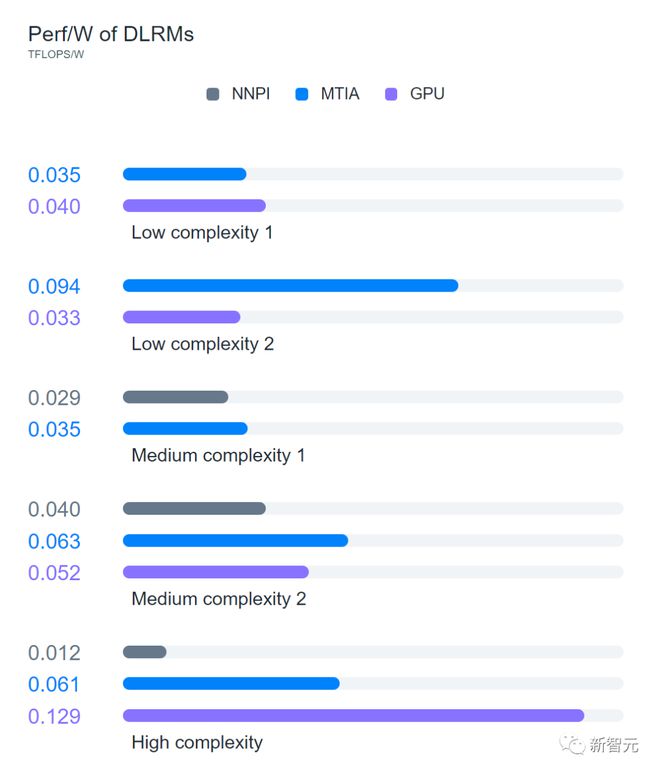

Meta evaluated MTIA on representative production workloads using five different DLRMs (deep learning recommendation models), ranging from low to high complexity.
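DLRM is the recommendation-model architecture Meta open-sourced in 2019. The sketch below is a heavily simplified, pure-Python illustration of its general structure: a bottom MLP for dense features, sum-pooled embedding lookups for categorical features, pairwise dot-product interactions, and a top layer producing a click probability. Layer sizes, random weights and function names are illustrative assumptions. The production models Meta benchmarked are far larger, and their configurations aren't given here.

```python
import math
import random


def linear_relu(x, weights, bias):
    """One fully connected layer followed by ReLU."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, bias)]


def embedding_bag(table, indices):
    """Sum-pool the embedding rows selected by `indices`."""
    out = [0.0] * len(table[0])
    for idx in indices:
        for d, v in enumerate(table[idx]):
            out[d] += v
    return out


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def dlrm_forward(dense, sparse, params):
    x = linear_relu(dense, *params["bottom"])       # 1) bottom MLP
    embs = [embedding_bag(t, ids)                   # 2) embedding lookups
            for t, ids in zip(params["tables"], sparse)]
    vecs = [x] + embs                               # 3) pairwise interactions
    inter = [dot(vecs[i], vecs[j])
             for i in range(len(vecs)) for j in range(i + 1, len(vecs))]
    w, b = params["top"]                            # 4) top layer + sigmoid
    return 1.0 / (1.0 + math.exp(-(dot(w, x + inter) + b)))


def make_params(n_dense=4, dim=3, table_rows=(10, 20), seed=0):
    rng = random.Random(seed)

    def rand():
        return rng.uniform(-0.5, 0.5)

    bottom_w = [[rand() for _ in range(n_dense)] for _ in range(dim)]
    tables = [[[rand() for _ in range(dim)] for _ in range(r)] for r in table_rows]
    n_inter = (len(table_rows) + 1) * len(table_rows) // 2  # C(T+1, 2) pairs
    top_w = [rand() for _ in range(dim + n_inter)]
    return {"bottom": (bottom_w, [0.0] * dim), "tables": tables, "top": (top_w, 0.0)}


params = make_params()
p = dlrm_forward([0.1, 0.2, 0.3, 0.4], [[1, 3], [5]], params)
print(f"predicted click probability: {p:.4f}")
```

Embedding lookups like these tend to stress memory capacity and bandwidth more than raw compute, which helps explain why memory figures so prominently in the chip's design.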

The evaluation found that MTIA is more efficient than NNPI and GPUs on low-complexity (LC1 and LC2) and medium-complexity (MC1 and MC2) models. The researchers also acknowledge that they have not yet optimized MTIA for high-complexity (HC) models.

However, MTIA appears to have a long way to go: according to media reports, it will not be available until 2025.

RSC

Perhaps one day Meta will be able to hand most of its AI training and inference over to MTIA.

For now, though, Meta still relies more on its own supercomputer, the Research SuperCluster, or RSC.

RSC debuted in January 2022 and, built in collaboration with Penguin Computing, Nvidia, and Pure Storage, has now completed its second phase of construction.

RSC currently comprises 2,000 NVIDIA DGX A100 systems containing 16,000 NVIDIA A100 GPUs.

At peak, RSC delivers nearly 5 exaflops of computing power (one exaflop is one quintillion, or 10^18, floating-point operations per second).
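The "nearly 5 exaflops" figure is consistent with a simple peak-throughput calculation. The sketch below assumes 8 GPUs per DGX A100 system and NVIDIA's published dense FP16/BF16 Tensor Core peak of 312 TFLOPS per A100. Which precision Meta's figure refers to is our assumption, not something stated here.

```python
# Rough check of RSC's "nearly 5 exaflops" figure.
DGX_SYSTEMS = 2_000
GPUS_PER_DGX_A100 = 8     # each DGX A100 system contains 8 A100 GPUs
A100_PEAK_TFLOPS = 312    # assumption: dense FP16/BF16 Tensor Core peak
EXA = 1e18                # 1 exaflop/s = 10**18 FLOP per second

gpus = DGX_SYSTEMS * GPUS_PER_DGX_A100
peak_flops = gpus * A100_PEAK_TFLOPS * 1e12
print(gpus)                                # 16000
print(f"{peak_flops / EXA:.3f} exaflops")  # 4.992 exaflops
```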

As more GPUs are allocated to a job, training time can drop dramatically. Over the past year, Meta has used this massive scale to train several influential projects.
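Why does adding GPUs cut training time, and why not perfectly? One common way to reason about it is Amdahl's law, used here as an illustrative model (Meta's post does not describe its scaling this way). The serial fraction and the single-GPU baseline below are made-up assumptions.

```python
def amdahl_time(t1: float, n: int, serial_fraction: float) -> float:
    """Estimated run time on n workers under Amdahl's law.

    t1: run time on one worker.
    serial_fraction: share of the work that cannot be parallelized.
    """
    if n < 1 or not 0.0 <= serial_fraction <= 1.0:
        raise ValueError("need n >= 1 and 0 <= serial_fraction <= 1")
    return t1 * (serial_fraction + (1.0 - serial_fraction) / n)


def speedup(n: int, serial_fraction: float) -> float:
    """Speedup over one worker; capped at 1 / serial_fraction."""
    return 1.0 / (serial_fraction + (1.0 - serial_fraction) / n)


# Hypothetical job: 100,000 days on one GPU, 0.01% non-parallel work.
for n in (1, 256, 2048, 16_000):
    print(n, f"{amdahl_time(100_000.0, n, 1e-4):,.1f} days",
          f"speedup x{speedup(n, 1e-4):,.0f}")
```

Even a tiny serial fraction caps the achievable speedup, which is why large clusters invest heavily in fast interconnects and storage to keep the non-parallel part small.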

Meta had previously committed to a "next-generation data center design" intended to be "AI-optimized" and "faster and more cost-effective to build."

Janardhan said Meta is very confident in the capabilities of its Research SuperCluster (RSC) AI supercomputer, which it believes is among the fastest AI supercomputers in the world.

So why did Meta build such a supercomputer for internal use?

First, other tech giants are putting heavy pressure on Meta. A few years ago, Microsoft partnered with OpenAI to build an AI supercomputer, and it has recently said it intends to work with AMD on a new AI supercomputer in the Azure cloud.

Google, meanwhile, has been touting its AI-focused supercomputer with 26,000 Nvidia H100 GPUs, which far outstrips Meta's.

Beyond competitive pressure, Meta also says RSC lets its researchers train models on real-world examples from the company's production systems.

This differs from the company's previous AI infrastructure, which used only open-source and publicly available datasets.

The RSC AI supercomputer is used to push the boundaries of AI research across multiple fields, including generative AI. Meta hopes to give its AI researchers state-of-the-art infrastructure for developing models, along with a training platform to advance AI.

At its peak, RSC can achieve nearly 5 exaflops of computing power, which the company claims makes it one of the fastest systems in the world, far surpassing many of the world's fastest supercomputers.

Meta stated that it used RSC to train LLaMA.

According to Meta, the largest LLaMA model was trained on 2,048 A100 GPUs and took 21 days.
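The training run quoted above translates into a compute budget with simple arithmetic. The rescaling to the full cluster assumes perfect linear scaling, which is an idealization; real jobs typically scale sub-linearly because of communication overhead.

```python
# Compute budget implied by "2,048 A100 GPUs for 21 days".
GPUS = 2_048
DAYS = 21
RSC_GPUS = 16_000

gpu_hours = GPUS * DAYS * 24
print(f"{gpu_hours:,} GPU-hours")  # 1,032,192 GPU-hours

# Idealized: the same work spread over every RSC GPU with perfect scaling.
ideal_days_on_full_rsc = GPUS * DAYS / RSC_GPUS
print(f"{ideal_days_on_full_rsc:.2f} days")  # 2.69 days
```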

As Meta tries to stand out amid the increasingly aggressive AI efforts of other tech giants, it clearly needs an AI hardware strategy of its own.

In addition to MTIA, Meta is also developing another chip to handle specific types of computing workloads.

This chip, known as Meta Scalable Video Processor (MSVP), is the first ASIC solution developed internally by Meta, designed specifically to meet the processing needs of video on demand and real-time streaming.

A few years ago, Meta began conceptualizing customized server-side video chips and announced the launch of ASICs for video transcoding and inference work in 2019.

Meta's custom chips aim to accelerate the processing speed of video work, such as streaming and transcoding.

Meta researchers stated that "in the future, MSVP will enable us to support more of Meta's most important use cases and needs, including short-form video, enabling efficient delivery of AI, AR/VR, and other metaverse content."

Meta in a hurry to catch up

If there is one thing these products have in common today, it is that Meta is desperately trying to accelerate its involvement in artificial intelligence, especially generative AI.

In February this year, Zuckerberg announced plans to create a new top-level generative AI team.

In his words, the aim is to give the company's research and development a nitro boost.

Chief AI Scientist Yann LeCun said Meta plans to deploy generative AI tools to keep expanding its vision for virtual reality.

Currently, Meta is exploring chat experiences in WhatsApp and Messenger, visual creation tools in Facebook, Instagram, and advertising, as well as video and multimodal experiences.

However, Meta is also feeling growing pressure from investors, who worry that it is not moving fast enough to capture the generative AI market.

Meta has struggled to respond to chatbots such as Bard, Bing Chat, and ChatGPT, and it has made little progress in image generation.

The latter is another key area of explosive growth.

If expert predictions are correct, the total addressable market for generative AI software could reach $150 billion.

Goldman Sachs predicts that generative AI could raise global GDP by 7%.

Capturing even a small slice of that could offset the billions of dollars Meta has lost on metaverse investments such as AR/VR headsets and meeting software.

According to figures for Reality Labs, Meta's division responsible for AR/VR technology, the unit posted a net loss of $4 billion in the previous quarter.

References:

https://ai.facebook.com/blog/meta-training-inference-accelerator-AI-MTIA/

https://ai.facebook.com/blog/supercomputer-meta-research-supercluster-2023/

https://ai.facebook.com/blog/meta-ai-infrastructure-overview/

